Concurrency in Swift (Grand Central Dispatch Part 1)

In this part we will cover the following topics:

- Need for Concurrency

- What are Concurrency and Parallelism

- Some Points about Threads

- What is Grand Central Dispatch

- How to Perform Concurrency / Parallelism With and Without GCD

- What are the Developer Challenges Without GCD and How GCD Solves Them

- Developer Responsibility With GCD

- GCD Benefits

- Dispatch Queues and Their Types

- Difference between Custom & Global Concurrent Queues

- Synchronous vs. Asynchronous Dispatching to Queues

- Some Code Examples

Why Concurrency

Suppose you are on the main thread and you need data from a server. You request the data and wait until the server responds. During that time the main thread cannot do any UI-related work, which makes your application unresponsive. Say the server takes 10 seconds to respond. If the user taps a button during those 10 seconds, the app won't react, which is very annoying for the user.

What if you run these two tasks at the same time, or approximately at the same time (context switching)? Then one thread is dedicated only to UI work and another thread is busy with the task that takes time to process. Before going further, let's first understand some concepts.
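Here is a minimal sketch of that idea. It assumes a hypothetical `fetchData` function whose slow server call is simulated with `Thread.sleep`.

- The slow part runs on a global background queue, so the caller returns immediately.
- The result is handed back on `callbackQueue`. In an app that would be `DispatchQueue.main` (the default here), so the UI can be updated safely.
- A command-line script has no running main run loop, so the demo below passes a private queue (hypothetical label `example.callback`) and waits with a semaphore instead.

```swift
import Foundation

// Holds the result; written on the callback queue, read after the semaphore fires.
final class ResultBox: @unchecked Sendable {
    var value: String?
}

// Hypothetical stand-in for a slow server request (not a real networking API).
func fetchData(callbackQueue: DispatchQueue = .main,
               completion: @escaping @Sendable (String) -> Void) {
    // The slow part runs on a background queue, so the caller is not blocked.
    DispatchQueue.global(qos: .userInitiated).async {
        Thread.sleep(forTimeInterval: 0.5) // pretend the server takes a while
        let response = "server response"
        // Deliver the result on the requested queue (the main queue in an app).
        callbackQueue.async {
            completion(response)
        }
    }
}

// Demo setup for a script: private callback queue plus a semaphore to wait on.
let callbackQueue = DispatchQueue(label: "example.callback")
let box = ResultBox()
let done = DispatchSemaphore(value: 0)

let start = Date()
fetchData(callbackQueue: callbackQueue) { response in
    box.value = response
    done.signal()
}
// fetchData only submitted work, so the caller is free again almost immediately.
let returnedAfter = Date().timeIntervalSince(start)
print("caller free again after \(returnedAfter) s")

done.wait()
print(box.value ?? "no response")
```

In an app you would simply call `fetchData { response in /* update the UI */ }` and let the default `.main` callback queue deliver the result.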

Concurrency

Concurrency means that an application is making progress on more than one task at the same time (concurrently) using time slicing. If the computer has only one CPU, the application cannot make progress on more than one task at exactly the same instant. Still, more than one task is being processed at a time inside the application, using a technique called context switching. The application doesn't completely finish one task before it begins the next.

In the case we discussed, if we execute the network call concurrently, two threads (main and background) execute instructions using context switching. While the processor is executing the network call's instructions, the main thread is not doing any work. Each of these time slices is so short, though, that you won't even notice the two threads are taking turns.

Parallelism

Parallelism is the notion of multiple things happening at the same time (no context switching).

In the case we discussed, if we execute the network call in parallel, the main and background threads execute instructions on two different cores at the same time. This is faster than the previous approach, but it requires extra hardware (more than one core).

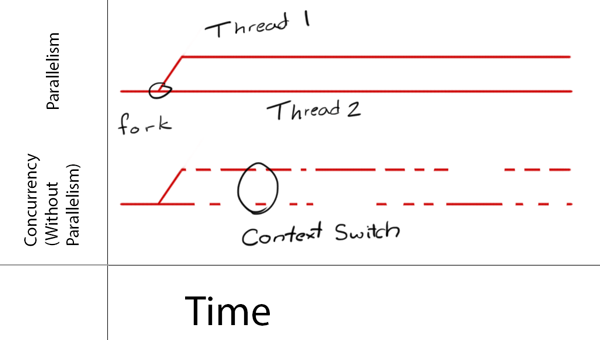

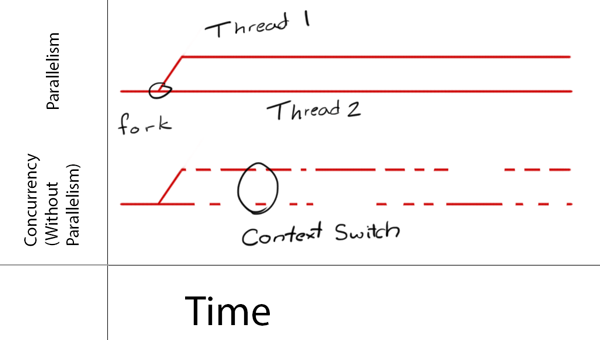

As shown in the figures, with parallelism both threads execute tasks continuously. With concurrency on a single core, Thread 2 is idle while Thread 1 is running.

Some Concepts About Threads

- On a single core, if you create 10 threads, they all run on that same core using concurrency (time slicing / context switching).

- On a 10-core machine, if you create 10 threads, the operating system's scheduler decides where they run. They may all execute on one core using context switching, each may execute on a different core (parallelism), or some may execute concurrently and some in parallel.

- If you create thousands of threads on a single-core machine, it spends most of its time context switching and very little doing actual work. This is why creating an optimal number of threads is a challenge.

Grand Central Dispatch (GCD)

Grand Central Dispatch or GCD is a low-level API for doing Concurrency / Parallelism in your application.

How GCD Performs Concurrency / Parallelism

Under the hood, GCD manages a shared thread pool and adds an optimal number of threads to that pool. With GCD you add blocks of code or work items to queues, and GCD decides which thread to execute them on. GCD executes these tasks either concurrently or in parallel, depending on the system's hardware and current load.

Note: If you give two tasks to GCD, you can't be sure whether they will run concurrently or in parallel.

From now on, we will use the term concurrency to mean either concurrency or parallelism.

Without GCD

In the past we achieved concurrency by creating threads manually: we created a thread and gave it a task to run. Threads are a low-level solution and must be managed manually.
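A minimal sketch of the manual approach, for contrast. It assumes placeholder work (summing 1...1000) and shows what we have to handle ourselves:

- We pick the thread count ourselves. One thread per active core is only a guess.
- We create and start each `Thread`.
- We track completion ourselves. A `DispatchSemaphore` is borrowed here only to wait for the threads.
- We guard shared state with a lock.

```swift
import Foundation

// Shared total, protected by a lock because several threads write to it.
final class Total: @unchecked Sendable {
    private let lock = NSLock()
    private var storage = 0
    func add(_ amount: Int) {
        lock.lock(); defer { lock.unlock() }
        storage += amount
    }
    var value: Int {
        lock.lock(); defer { lock.unlock() }
        return storage
    }
}

// Manual threading: we decide how many threads to create.
// One per active core is a guess that ignores the current system load.
let threadCount = ProcessInfo.processInfo.activeProcessorCount
let total = Total()
let finished = DispatchSemaphore(value: 0)

for index in 0..<threadCount {
    let thread = Thread {
        let partial = (1...1_000).reduce(0, +) // placeholder work
        total.add(partial)
        finished.signal() // tell the creator this thread is done
    }
    thread.name = "worker-\(index)"
    thread.start()
}

// Completion tracking is also our job.
for _ in 0..<threadCount {
    finished.wait()
}
print("threads: \(threadCount), total: \(total.value)")
```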

Developer Challenges Without GCD

- It's the developer's responsibility to decide the optimal number of threads for an application. If you create thousands of threads, most of the time will be spent context switching instead of doing actual work. On top of that, the optimal number of threads can change dynamically based on the current system load.

- The synchronization mechanisms typically used with threads add complexity.

- It's the developer's (or the application's) responsibility to make effective use of the extra cores.

- The application has to work out how many cores it can use efficiently, which is hard for an application to compute on its own.

How GCD Solves Them

- Creating the optimal number of threads is now GCD's responsibility. As discussed above, GCD manages a shared thread pool under the hood and adds an optimal number of threads to it.

- GCD moves thread-management code down to the system level, because the system can use cores more efficiently than any single application.

Developer Responsibility With GCD

All you have to do is define the tasks you want to execute concurrently and add them to an appropriate dispatch queue. GCD takes care of creating the needed threads and scheduling your tasks to run on those threads. Super cool 😃
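A minimal sketch of that workflow, using placeholder work (summing 1...1000). We only define eight tasks and add them to a global concurrent queue. GCD decides how many threads actually run them. `DispatchGroup`, which this part doesn't cover, is used only so the script can wait for the tasks to finish.

```swift
import Foundation

// Shared total, protected by a lock because tasks may run on several threads.
final class Total: @unchecked Sendable {
    private let lock = NSLock()
    private var storage = 0
    func add(_ amount: Int) {
        lock.lock(); defer { lock.unlock() }
        storage += amount
    }
    var value: Int {
        lock.lock(); defer { lock.unlock() }
        return storage
    }
}

let total = Total()
let group = DispatchGroup()
let queue = DispatchQueue.global(qos: .utility)

for _ in 0..<8 {
    // We only describe the task; GCD picks a thread from its shared pool.
    queue.async(group: group) {
        total.add((1...1_000).reduce(0, +))
    }
}

group.wait() // block until all eight tasks have finished
print("total: \(total.value)")
```

Notice there is no thread count, no `Thread` object and no per-thread bookkeeping in this version.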

GCD Benefits

- It provides a simple programming interface (in Swift).

- Automatic thread pool management

- Faster than manually creating and managing threads

- Doesn't trap to the kernel under load

- Dynamic thread scaling based on the current system load

- Memory efficient, since thread stacks don't linger in application memory

- Uses cores more efficiently, because this logic moves to the system level

Dispatch Queues

Dispatch queues are a C-based mechanism for executing custom tasks. A dispatch queue always dequeues and starts tasks in the same order in which they were added to the queue (first in, first out). Dispatch queues are thread-safe, which means you can access them from multiple threads simultaneously. A dispatch queue is not a thread.

If you want to perform concurrent tasks through GCD, you add them to an appropriate dispatch queue. GCD picks the tasks and executes them based on the queue's configuration.

Dispatch queues are the core of GCD: GCD picks and executes concurrent tasks based on how each dispatch queue is configured.

Serial Dispatch Queue

- Serial dispatch queues are also known as private dispatch queues.

- They execute one task at a time, in the order in which the tasks were added to the queue. For example, if you add five tasks to a serial dispatch queue, GCD starts with the first task and won't pick up the second task until the first one has completed, and so on.

- Serial queues are often used to synchronize access to a specific resource. Say you have two network calls that each take 10 seconds, so you move them to background threads, and both access the same resource. To synchronize them, you can put both tasks on a serial queue: the second network call will not start until the first one has completed. This assumes each task does its work synchronously inside its block. A block that only starts an asynchronous request finishes as soon as the request is started.

- A serial queue performs tasks one at a time, so only one thread is in use at any moment. However, the tasks are not guaranteed to run on the same thread. (I was asked exactly this question in an interview.)

- You can create as many serial queues as you need, and each queue operates concurrently with respect to all other queues. This is helpful for implementing dependent concurrent calls, which we will see in the second part. In other words, if you create four serial queues, each queue executes only one task at a time, but up to four tasks could still execute concurrently, one from each queue.

- If you have two tasks that access the same shared resource but run on different threads, either thread could modify the resource first and you would need to use a lock to ensure that both tasks did not modify that resource at the same time. With dispatch queues, you could add both tasks to a serial dispatch queue to ensure that only one task modified the resource at any given time. This type of queue-based synchronization is more efficient than locks because locks always require an expensive kernel trap in both the contested and uncontested cases, whereas a dispatch queue works primarily in your application’s process space and only calls down to the kernel when absolutely necessary.
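A minimal sketch of queue-based synchronization, assuming a hypothetical `BankAccount` that many threads share. Every read and write of `balance` goes through one private serial queue (hypothetical label `example.bankAccount`), so only one task touches it at a time and no lock is needed.

- Writes use `async`, because the caller doesn't need to wait.
- The read uses `sync`, because the caller needs the value.

```swift
import Foundation

final class BankAccount: @unchecked Sendable {
    // Private serial queue: the only place `balance` is read or written.
    private let queue = DispatchQueue(label: "example.bankAccount")
    private var balance = 0

    func deposit(_ amount: Int) {
        queue.async { self.balance += amount } // serialized write
    }

    var currentBalance: Int {
        queue.sync { balance } // runs after every write queued before it
    }
}

let account = BankAccount()
let group = DispatchGroup()
for _ in 0..<100 {
    // Deposits arrive from many threads at once.
    DispatchQueue.global().async(group: group) {
        account.deposit(10)
    }
}
group.wait() // all 100 deposits have been queued
print(account.currentBalance)
```

Because the serial queue is FIFO, the `sync` read is queued behind all 100 deposits. It therefore sees the final balance.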

Concurrent Dispatch Queue

- Concurrent queues (also known as a type of global dispatch queue) execute one or more tasks concurrently.

- If you add four separate tasks to a global concurrent queue, they still start in the same order in which they were added. GCD picks the first task and runs it, then starts the second task without waiting for the first to complete, and so on. This is ideal when you want background execution and don't care whether these blocks run at the same time as other dispatched blocks.

- The currently executing tasks run on distinct threads that are managed by the dispatch queue.

- The exact number of tasks executing at any given point is variable and depends on system conditions. In an interview, someone asked me: if you create a concurrent queue with four tasks, how many threads will it create? The answer is that we can't be sure how many threads GCD will use to execute these tasks. It depends on system conditions, and it could use anywhere from one thread to four.

- In GCD, there are two ways to run blocks concurrently: create a custom concurrent queue or use a global concurrent queue. We will do many experiments later.
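A minimal sketch of both ways, using a hypothetical label `example.concurrent`. A custom queue needs `attributes: .concurrent`; without it, `DispatchQueue(label:)` creates a serial queue. A global queue is simply requested. On both, tasks start in FIFO order but may finish in any order.

```swift
import Foundation

// 1. A custom (private) concurrent queue.
let customQueue = DispatchQueue(label: "example.concurrent", attributes: .concurrent)
// 2. A shared global concurrent queue.
let globalQueue = DispatchQueue.global(qos: .default)

// Thread-safe log, since tasks may append from several threads at once.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []
    func add(_ entry: String) {
        lock.lock(); defer { lock.unlock() }
        entries.append(entry)
    }
    var all: [String] {
        lock.lock(); defer { lock.unlock() }
        return entries
    }
}

let log = Log()
let group = DispatchGroup()
for (name, queue) in [("custom", customQueue), ("global", globalQueue)] {
    for task in 1...4 {
        queue.async(group: group) {
            // The order in which these finish is not guaranteed.
            log.add("\(name) task \(task)")
        }
    }
}
group.wait() // only so the script waits before printing
print(log.all)
```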

Difference between Custom & Global Concurrent Queues

As shown in Figure 1, we created two concurrent queues. Global queues are concurrent queues shared by the whole system, so asking for the global queue with the same QoS always returns the same shared queue. A custom concurrent queue, by contrast, is private, and a new queue is returned every time you create one.

There are several global concurrent queues with different priorities. When getting a global concurrent queue, you don't specify the priority directly. Instead you specify a Quality of Service (QoS) class: User-interactive, User-initiated, Utility or Background. User-interactive has the highest priority and Background the lowest. There is also a Default class, which is what `DispatchQueue.global()` uses when you don't pass a QoS. You can see when to use each one from this link.
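A minimal sketch of requesting global queues by QoS class rather than by numeric priority. The work in each task is a placeholder counter. The comments give typical uses of each class and are a rough guide, not an exhaustive rule.

```swift
import Foundation

// Thread-safe counter, since tasks run on several threads.
final class Counter: @unchecked Sendable {
    private let lock = NSLock()
    private var count = 0
    func increment() {
        lock.lock(); defer { lock.unlock() }
        count += 1
    }
    var value: Int {
        lock.lock(); defer { lock.unlock() }
        return count
    }
}

// Highest priority first.
let qosClasses: [DispatchQoS.QoSClass] = [
    .userInteractive, // work the UI needs right now, e.g. animations
    .userInitiated,   // work the user started and is waiting for
    .utility,         // longer tasks, e.g. a download with a progress bar
    .background,      // work the user doesn't see, e.g. cleanup or prefetching
]

let counter = Counter()
let group = DispatchGroup()
for qos in qosClasses {
    // We name a QoS class; GCD hands back the matching global queue.
    DispatchQueue.global(qos: qos).async(group: group) {
        counter.increment()
    }
}

// No argument means the .default QoS class.
DispatchQueue.global().async(group: group) {
    counter.increment()
}

group.wait()
print("ran \(counter.value) tasks")
```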

Custom queues let you do the following things that global queues don't:

- Give the queue a label that's meaningful to you for debugging

- Suspend it (and resume it later)

- Submit barrier tasks
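A minimal sketch of these three extras on a custom concurrent queue, using a hypothetical `ThreadSafeCache` and the hypothetical label `example.cache`:

- The label can be read back for debugging.
- `suspend()`/`resume()` pause and restart the execution of queued blocks.
- A `.barrier` block waits for in-flight reads and then runs alone.

Barriers only behave this way on a concurrent queue you create yourself. On a global queue, a barrier block is treated like an ordinary async block.

```swift
import Foundation

final class ThreadSafeCache: @unchecked Sendable {
    let queue = DispatchQueue(label: "example.cache", attributes: .concurrent)
    private var storage: [String: Int] = [:]

    // Reads may run concurrently with each other.
    func value(for key: String) -> Int? {
        queue.sync { storage[key] }
    }

    // Writes use a barrier: they wait for running reads and then run alone.
    func set(_ value: Int, for key: String) {
        queue.async(flags: .barrier) { self.storage[key] = value }
    }
}

let cache = ThreadSafeCache()
print(cache.queue.label) // the label shows up in the debugger

cache.queue.suspend()          // blocks queued from now on won't start...
cache.set(42, for: "answer")
cache.queue.resume()           // ...until the queue is resumed

// Never call sync on a suspended queue: it would wait forever.
print(cache.value(for: "answer") as Any)
```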

Main Dispatch Queue

- The main dispatch queue is a globally available serial queue that executes tasks on the application’s main thread

- This queue works with the application’s run loop (if one is present) to interleave the execution of queued tasks with the execution of other event sources attached to the run loop. Because it runs on your application’s main thread, the main queue is often used as a key synchronization point for an application.

Synchronous vs. Asynchronous

We have learned that tasks on a queue can execute serially or concurrently. With GCD, you can also dispatch a task to a queue either synchronously or asynchronously.

A synchronous function returns control to the caller only after the task completes. If you dispatch a block to a queue with sync, the call does not return until that block has finished executing. On a serial queue, this also means waiting for any tasks queued ahead of it.

In contrast, an asynchronous function returns to the caller immediately, before the task completes. If you dispatch a block with async, the call doesn't wait for the block to execute and returns right away.
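A small sketch that makes the difference observable, using a hypothetical private serial queue labelled `example.serial`.

- `sync` does not return until its block has run.
- To show that `async` returns right away, the async block waits on a semaphore (`gate`). The caller only signals it after `async` has returned. If `async` blocked the caller, this script would hang.

```swift
import Foundation

// Thread-safe log of events in the order they happen.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []
    func add(_ entry: String) {
        lock.lock(); defer { lock.unlock() }
        entries.append(entry)
    }
    var all: [String] {
        lock.lock(); defer { lock.unlock() }
        return entries
    }
}

let queue = DispatchQueue(label: "example.serial")
let log = Log()

queue.sync { log.add("sync block") }
log.add("after sync")      // recorded only after the sync block has run

let gate = DispatchSemaphore(value: 0)
let done = DispatchSemaphore(value: 0)
queue.async {
    gate.wait()            // proceeds only once the caller has moved on
    log.add("async block")
    done.signal()
}
log.add("after async")     // async already handed control back to us
gate.signal()
done.wait()

print(log.all)
```

The printed order is `sync block`, `after sync`, `after async`, `async block`.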

As shown in Figure 2, you execute your time-consuming tasks on a global concurrent queue, yet your main thread is still blocked. This is because you dispatched the tasks to the global queue synchronously from the main thread, so the main thread waits until each task has finished. The instructions therefore execute serially, as shown in Figure 3.

As shown in Figure 4, when we dispatch asynchronously, control returns to the main thread immediately, so the main thread's print runs first. Because these are global concurrent queues, the tasks on them execute concurrently.

Let's walk through this use case:

- The main thread submits the first block to a global queue. Because it's async, control returns to the main thread immediately, and GCD picks a thread from its pool to run that block.

- The main thread submits the second block to a global queue. Again control returns immediately, and GCD runs this block on a pool thread, concurrently with the first one.

- The main thread then executes its own print statement.
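A minimal sketch of this walkthrough with placeholder tasks. Two blocks go to the global queue asynchronously, so the main thread reaches its own entry without waiting. Each block's steps stay in order, but the two blocks (and the main thread's entry) may interleave. The `DispatchGroup` is there only so the script waits before checking the log.

```swift
import Foundation

// Thread-safe log, since three threads append to it.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []
    func add(_ entry: String) {
        lock.lock(); defer { lock.unlock() }
        entries.append(entry)
    }
    var all: [String] {
        lock.lock(); defer { lock.unlock() }
        return entries
    }
}

let log = Log()
let group = DispatchGroup()

// First async block: returns control to the main thread immediately.
DispatchQueue.global().async(group: group) {
    for i in 1...3 { log.add("first \(i)") }
}
// Second async block: also returns immediately and runs concurrently.
DispatchQueue.global().async(group: group) {
    for i in 1...3 { log.add("second \(i)") }
}
// The main thread gets here without waiting for either block.
log.add("main print")

group.wait()
print(log.all)
```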

As shown in Figure 5.1, this creates a deadlock. The outer block is waiting for the inner block to complete, and the inner block won't start until the outer block finishes, because both were submitted to the same serial queue.

In concurrent computing, a deadlock is a state in which each member of a group is waiting for another member, including itself, to take action.

As shown in Figure 5.2, this also creates a deadlock: we are on the main queue, which is serial, and we call sync on that same queue.
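A minimal sketch of one common way to avoid the nested-`sync` deadlock. It assumes a hypothetical helper `syncSafely` and a queue labelled `example.serial`. The queue is tagged with a `DispatchSpecificKey`. If code is already running on that queue, the helper runs the block directly instead of calling `sync` again. The commented-out line is the pattern that deadlocks; recent libdispatch versions may detect it and crash instead.

```swift
import Foundation

let serialQueue = DispatchQueue(label: "example.serial")
let queueKey = DispatchSpecificKey<Bool>()
serialQueue.setSpecific(key: queueKey, value: true) // tag the queue

// Runs `work` on serialQueue without deadlocking if we're already on it.
func syncSafely<T>(_ work: () -> T) -> T {
    if DispatchQueue.getSpecific(key: queueKey) == true {
        return work()                   // already on serialQueue: just run it
    }
    return serialQueue.sync(execute: work)
}

// serialQueue.sync { serialQueue.sync { } } // deadlock: inner waits on outer

let result: String = serialQueue.sync {
    syncSafely { "inner block ran" }    // nested call, no deadlock
}
print(result)
```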

As shown in Figure 6, we created a private/custom serial queue and added two tasks to it. Since the queue is serial, the tasks execute one after the other: the second task starts only when the first one has finished, as shown below.

Note: We dispatch to the queue asynchronously so that we don't block the main thread.
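A minimal sketch in the spirit of Figure 6, using a hypothetical label `example.figure6` and placeholder tasks. Two tasks go to a custom serial queue with `async`. The second never starts before the first finishes, so the log is always in submission order. The `DispatchGroup` wait is only for the script; an app would not block like this.

```swift
import Foundation

// Thread-safe log of the steps each task performs.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []
    func add(_ entry: String) {
        lock.lock(); defer { lock.unlock() }
        entries.append(entry)
    }
    var all: [String] {
        lock.lock(); defer { lock.unlock() }
        return entries
    }
}

let serialQueue = DispatchQueue(label: "example.figure6") // serial by default
let log = Log()
let group = DispatchGroup()

serialQueue.async(group: group) {
    for i in 1...3 { log.add("task 1 step \(i)") }
}
serialQueue.async(group: group) {
    // Starts only after task 1 has finished.
    for i in 1...3 { log.add("task 2 step \(i)") }
}

group.wait()
print(log.all)
```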

As shown in Figure 7, we created a private/custom concurrent queue and added two tasks to it. Since the queue is concurrent, both tasks execute concurrently, as shown below.

Note: We dispatch to the queue asynchronously so that we don't block the main thread.

As discussed above, if you create four serial queues, each queue executes only one task at a time, but up to four tasks could still execute concurrently, one from each queue, as shown in Figure 8.
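A minimal sketch of the Figure 8 idea, assuming four hypothetical queues labelled `example.serial.1` to `example.serial.4` and placeholder steps. Each serial queue keeps its own tasks in order, but the queues run alongside one another, so entries from different queues may interleave.

```swift
import Foundation

// Thread-safe log, since up to four queues append at once.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []
    func add(_ entry: String) {
        lock.lock(); defer { lock.unlock() }
        entries.append(entry)
    }
    var all: [String] {
        lock.lock(); defer { lock.unlock() }
        return entries
    }
}

let queues = (1...4).map { DispatchQueue(label: "example.serial.\($0)") }
let log = Log()
let group = DispatchGroup()

for (index, queue) in queues.enumerated() {
    for step in 1...3 {
        // Within one queue: strictly in order. Across queues: concurrent.
        queue.async(group: group) {
            log.add("queue \(index + 1) step \(step)")
        }
    }
}

group.wait()
print(log.all)
```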

DispatchWorkItem

DispatchWorkItem encapsulates a task (a block of code) so you can store it and submit it to a dispatch queue later. You can perform several operations on it, and you can even cancel the task if it turns out not to be needed later in the code.

In the code below, we start by creating a queue. Then we create our DispatchWorkItem object, with a single line of code waiting to be executed.

Next, we submit two tasks before cancelling the work item, and then we submit another task after cancelling it.

If you look at the output, only one task executed. The rest were cancelled, because Task 2 was scheduled to run after one second and Task 3 was submitted after the work item was cancelled. Cancellation only skips executions that haven't started yet. Strictly speaking, Task 1 races with cancel(): it prints only if the queue starts it before cancel() is called.

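```swift
import Foundation

let queue = DispatchQueue(label: "com.swiftpal.dispatch.workItem")

// Create a work item
let workItem = DispatchWorkItem {
    print("Stored Task")
}

// Task 1: submitted right away
queue.async(execute: workItem)

// Task 2: scheduled to run after one second
queue.asyncAfter(deadline: DispatchTime.now() + 1, execute: workItem)

// Work item cancel: executions that haven't started yet will be skipped
workItem.cancel()

// Task 3: submitted after cancelling, so it won't run
queue.async(execute: workItem)

if workItem.isCancelled {
    print("Task was cancelled")
}

/* Output (line order can vary, since Task 1 runs on another thread):
Stored Task
Task was cancelled
*/
```

What’s next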

In the next part we will cover other GCD components.

Useful links

https://medium.com/@ellstang/sync-queue-vs-async-queue-in-ios-c607c201ba45

https://www.swiftpal.io/articles/what-is-dispatchworkitem-in-gcd-grand-central-dispatch-swift